Commentary: The silent danger of AI

Published in Op Eds

For survivors of human trafficking and domestic violence, and for people seeking reproductive care, the threat today isn't just an abusive person; it's an algorithm, a data broker, a surveillance system, a phone app.

Take the example of Heather Cornelius. Her husband monitored her every move using smartphone GPS, shared accounts and other apps he controlled. When she fled, she spent a night in a parking lot. "He was apologizing … but later he threatened me and the kids," she said. He poured chemicals in her eyes, and she lost the vision in one of them. She regained her freedom but not her privacy.

Her story is not just about physical violence; it's about how the digital tools meant to help us, like our phones, GPS and smart-home devices, can become weapons in the hands of an abuser. Data that seems trivial (location, voice, login times) becomes power over someone's life.

And what of people seeking abortions, or those who have already had one? The physical barrier of a clinic is just the beginning. Now the digital barrier looms.

In one documented case, Latice Fisher voluntarily surrendered her phone to police during an investigation. Investigators allegedly found web searches on how to buy misoprostol abortion pills online. Those search records contributed to charges of attempted feticide against her, even though there was no confirmation that any pills had been taken.

Online pharmacies offering abortion pills by mail have been shown to share sensitive tracking data with third parties such as search engines, often without users knowing it is happening, putting people seeking care at risk. And in Illinois, police allegedly shared automatic license-plate reader data with an out-of-state law enforcement agency seeking a woman who had legally obtained an abortion, triggering a formal investigation.

When your period-tracking app, your clinic visit, your device's GPS and your online searches all become traceable data points, what was once a personal decision becomes a digital breadcrumb trail toward danger. The emergence of artificial intelligence, or AI, in the last few years has only made this worse, making the aggregation of all this data faster and more thorough.

AI doesn't protect against trauma. It doesn't understand nuance or fear. It simply digests data, finds patterns and makes predictions. That means a human trafficker or abuser can use location data, smart-home logs and app metadata to track others covertly. A photo of a child in a school uniform, their online presence and a scanned image can be used to identify or exploit them. And authorities can use browser history, app entries or search-engine keywords to potentially prosecute people seeking reproductive care.

This is not hypothetical. The data economy thrives on the convenience of apps, trackers and so-called free services. But that convenience comes at the cost of privacy. And for those who are already vulnerable, such as survivors, children and people seeking reproductive care, the cost can be life-changing.

In response to this danger, we must take some key precautions.

Survivors must have rights to digital erasure: the ability to wipe, anonymize and remove trace data that could be used to harm them. Children must be shielded from systems that treat their data as a goldmine available to predators. Reproductive-care seekers should keep in mind that when they search, travel and use various apps, their digital history may be used against them.

We also need legislation that protects health-data privacy rather than leaving it exposed to corporate or state extraction, ensuring that personal data such as your location, search history or app usage cannot be used to track you, exploit you or prosecute you. Tech companies, data brokers and app developers must be held accountable.

I think of the many women who have fled an abuser but still live in fear of the phones in their pockets, the devices on their walls, the trackers humming in the cloud. I think of the children whose faces are extracted, pixel by pixel, by AI models and turned into exploitative images by predators. I think of the person seeking reproductive care who searches for the words "abortion clinic," "pill" or "telehealth," unaware that each click is a data point that could one day support an indictment.

When AI companies turn our data into a weapon, we are all at risk. We must build a digital world where privacy equals protection, where freedom extends into our devices and where invisible algorithms don’t become invisible cages.

____

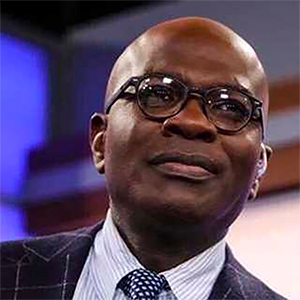

Sylvia Ghazarian is executive director of the Women’s Reproductive Rights Assistance Project and a member of the California Future of Abortion Council. This column was produced for Progressive Perspectives, a project of The Progressive magazine, and distributed by Tribune News Service.

_____

©2026 Tribune Content Agency, LLC.
