Science & Technology

Editorial: In eco-minded California, there's still no constitutional right to clean air and water

California may be a leader in the fight against climate change, but the state is years, even decades, behind other states when it comes to granting environmental rights to its citizens.

While a handful of other state constitutions, including those of New York and Pennsylvania, declare the people’s rights to clean air, water and a healthy ...Read more

California battery storage increasing rapidly, but not enough to end blackouts, Gov. Newsom says

Gov. Gavin Newsom said Thursday that California continued to rapidly add the battery storage that is crucial to the transition to cleaner energy, but admitted it was still not enough to avoid blackouts during heat waves.

Standing in the middle of a solar farm in Yolo County, Newsom announced the state now had battery storage systems with the ...Read more

Astronauts arrive at Kennedy Space Center as 1st crew for Boeing's Starliner spacecraft

CAPE CANAVERAL, Fla. — It’s not just another ride for a pair of veteran NASA astronauts who arrived to the Space Coast ahead of their flight onboard Boeing’s CST-100 Starliner.

Barry “Butch” Wilmore and Sunita “Suni” Williams, who both joined NASA’s astronaut corps more than two decades ago, will be the commander and pilot for ...Read more

Feds greenlight return of grizzlies to Washington's North Cascades

SEATTLE — Grizzly bears will soon return to the North Cascades.

The National Park Service and U.S. Fish & Wildlife Service filed a decision Thursday outlining a plan to capture three to seven grizzlies from other ecosystems in the Rocky Mountains or interior British Columbia and release them in the North Cascades each summer for five to 10 ...Read more

Wolf connected to livestock killings could be breeding, wildlife officials say

Wildlife officials said they will not remove a gray wolf potentially connected to recent livestock killings, despite requests from stockgrowers.

Two of the gray wolves reintroduced to Colorado’s Grand County in December — including one suspected in recent depredations — are likely “denning” and in the breeding process, Colorado Parks ...Read more

How bird flu virus fragments get into milk sold in stores, and what the spread of H5N1 in cows means for the dairy industry and milk drinkers

The discovery of viral fragments of avian flu virus in milk sold in U.S. stores suggests that the H5N1 virus may be more widespread in U.S. dairy cattle than previously realized.

The Food and Drug Administration was quick to stress on April 24, 2024, that it believes the commercial milk supply is safe. However, highly pathogenic avian...Read more

Biden administration aims to speed up the demise of coal-fired power plants

Burning coal to generate electricity is rapidly declining in the United States.

President Joe Biden’s administration moved Thursday to speed up the demise of the climate-changing, lung-damaging fossil fuel while attempting to ease the transition to cleaner sources of energy.

A suite of new regulations adopted by the U.S. Environmental ...Read more

Scientists confine, study Chinook at restored Snoqualmie River habitat

FALL CITY, Wash. — In newly restored river channels on the Snoqualmie, baby Chinook salmon are confined in 19 enclosures about the size of large suitcases as they munch on little crustaceans and invertebrate insects floating or swimming by.

What's in the salmon's stomachs, tracked by scientists, could hold clues about the species' survival.

EPA says its new strict power plant rules will pass legal tests

WASHINGTON — The EPA on Thursday announced a series of actions to address pollution from fossil fuel power generators, including a final rule for existing coal-fired and new natural gas-fired plants that will eventually require them to capture 90 percent of their carbon dioxide emissions.

The agency said that the rules, which alter some of ...Read more

Large retailers don’t have smokestacks, but they generate a lot of pollution − and states are starting to regulate it

Did you receive a mail-order package this week? Carriers in the U.S. shipped 64 packages for every American in 2022, so it’s quite possible.

That commerce reflects the expansion of large-scale retail in recent decades, especially big-box chains like Walmart, Target, Best Buy and Home Depot that sell goods both in stores and online. ...Read more

Sharks 'adapting their movements and routines,' great white researchers discover

Do great white sharks change their behaviors in different environments, or do the apex predators follow the same routines regardless of location?

Researchers recently set out to solve this shark puzzle, reportedly uncovering great white behaviors by attaching smart tags and cameras to the sharks' fins.

Great whites adapt their movements and ...Read more

Health care is a tough arena for AI to make a difference

It was a warp-speed tour of what's happening with artificial intelligence in medicine.

Chris Manrodt, an R&D manager for Philips' medical imaging business in Plymouth, Minnesota, last Friday gave a presentation to several hundred Twin Cities software developers and health care executives and then declared, "I feel like I've said about 50 ...Read more

How trains linked rival port cities along the US East Coast into a cultural and economic megalopolis

The Northeast corridor is America’s busiest rail line. Each day, its trains deliver 800,000 passengers to Boston, New York, Philadelphia, Washington and points in between.

The Northeast corridor is also a name for the place those trains serve: the coastal plain stretching from Virginia to Massachusetts, where over 17% of the country...Read more

‘Dune: Awakening’ takes fans to Arrakis and forces them to survive a wasteland

“Dune” may not seem like a natural setting for a survival MMO. Players explore the desert planet of Arrakis and resources appear scarce, but the developers at Funcom are huge fans of Frank Herbert’s work and heavily influenced by director Denis Villeneuve’s films. They know there’s more under the surface, and the team led by creative...Read more

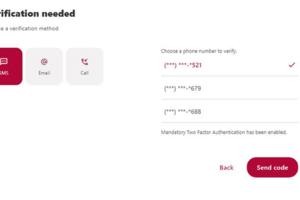

Jim Rossman: Helping mom secure her online banking

I spent a recent weekend visiting with my mom, and she had a rather short list of technical things she needed my help with on this trip.

The first was easy – she has a new Wyze doorbell and it had lost its connection to her Amazon Echo.

When I set it up, her Echo Dot would announce when someone was ringing her doorbell. The Echo would also...Read more

Gadgets: Make your phone truly hands-free

Ohsnap's Snap 4 Luxe is a multifunctional accessory that truly makes smartphones hands-free. It attaches to the back of a phone and is ready to stick to any metal surface around your house or office. With the accompanying Snapmount 2.0 wall mount, it can be put anywhere.

While Apple MagSafe comes to mind, it is not limited to just that ...Read more

‘Bitcraft: Age of Automata’ wants players to rebuild civilization from the ground up

The developers behind “Bitcraft: Age of Automata” want you to build the world from the ground up. Villages, towns, cities, nations and empires are all in players’ hands. That’s what Clockwork Labs envisions for its ambitious sandbox massively multiplayer online game.

Players start with primitive tools. Like other survival games, they’ll...Read more

Illinois residents encouraged to destroy the eggs of invasive insects to slow spread

CHICAGO — While Chicagoans were alarmed to learn the spotted lanternfly had been found in Illinois last year, experts say spring is the time to take action against that insect — as well as another damaging invasive species that has made far more inroads and gotten less attention.

The spongy moth, formerly known as the gypsy moth, has been ...Read more

Steelhead trout, once thriving in Southern California, are declared endangered

Southern California’s rivers and creeks once teemed with large, silvery fish that arrived from the ocean and swam upstream to spawn. But today, these fish are seldom seen.

Southern California steelhead trout have been pushed to the brink of extinction as their river habitats have been altered by development and fragmented by barriers and dams...Read more

Climate change supercharged a heat dome, intensifying 2021 fire season, study finds

As a massive heat dome lingered over the Pacific Northwest three years ago, swaths of North America simmered, and then burned. Wildfires charred more than 18.5 million acres across the continent, with the most land burned in Canada and California.

A new study has revealed the extent to which human-caused climate change intensified the ...Read more
